ChatGPT and the underlying AI technologies it represents evoke both fascination and concern. ChatGPT is the result of advances in mathematical research, computing power, and the proliferation of data on the internet. It represents a technological revolution that is only just beginning, with significant prospects and challenges.

The Rise of Artificial Intelligence

It is generally accepted that the origins of artificial intelligence date back to the 1950s, but the wartime work of Alan Turing and his fellow codebreakers at Bletchley Park was an important precursor. They succeeded in breaking the code of the German Enigma machine using a combination of electromechanical machines and mathematical methods, thus helping to lay the foundation for modern computer science and artificial intelligence.

The "Turing Test", also known as the "imitation game" and proposed by Turing in 1950, involves three participants: a human judge, a human, and a machine. The judge puts questions to the other two without knowing which one is the real human. Both the human and the machine try to convince the judge that they are "human". If the machine manages to deceive the judge into believing that it is human, it is considered to have passed the Turing Test.

For those who have interacted with ChatGPT, it is tempting to conclude that this model passes the Turing Test. ChatGPT relies on text-generation technology that repeatedly predicts the probability of each possible next word, and in this way composes coherent and fluent text. This capability comes from intensive training on a very large amount of data, which allows the model to perform complex natural language tasks.
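The idea of predicting the probability of the next word can be illustrated with a deliberately tiny sketch. It is not how ChatGPT is implemented: ChatGPT relies on a large neural network, whereas the example below only counts which word follows which in a toy corpus. The function names (`train_bigram_model`, `next_word_probabilities`, `generate`) and the corpus are hypothetical and chosen purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        counts[current_word][next_word] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn the raw follower counts of `word` into probabilities."""
    followers = counts.get(word.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the word was never seen with a follower
    return {w: c / total for w, c in followers.items()}


def generate(counts, start, max_words=5):
    """Greedy generation: always append the most probable next word."""
    output = [start.lower()]
    for _ in range(max_words):
        probs = next_word_probabilities(counts, output[-1])
        if not probs:
            break
        output.append(max(probs, key=probs.get))
    return " ".join(output)


# Toy corpus (an assumption for illustration, not real training data).
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)

# In this corpus, "cat" follows "the" in 2 cases out of 4.
print(next_word_probabilities(model, "the"))
print(generate(model, "the", max_words=3))
```

A real language model replaces the counting table with a neural network trained on vastly more text, and it conditions on a long context rather than a single preceding word, but the principle of choosing the next word from a probability distribution is the same.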

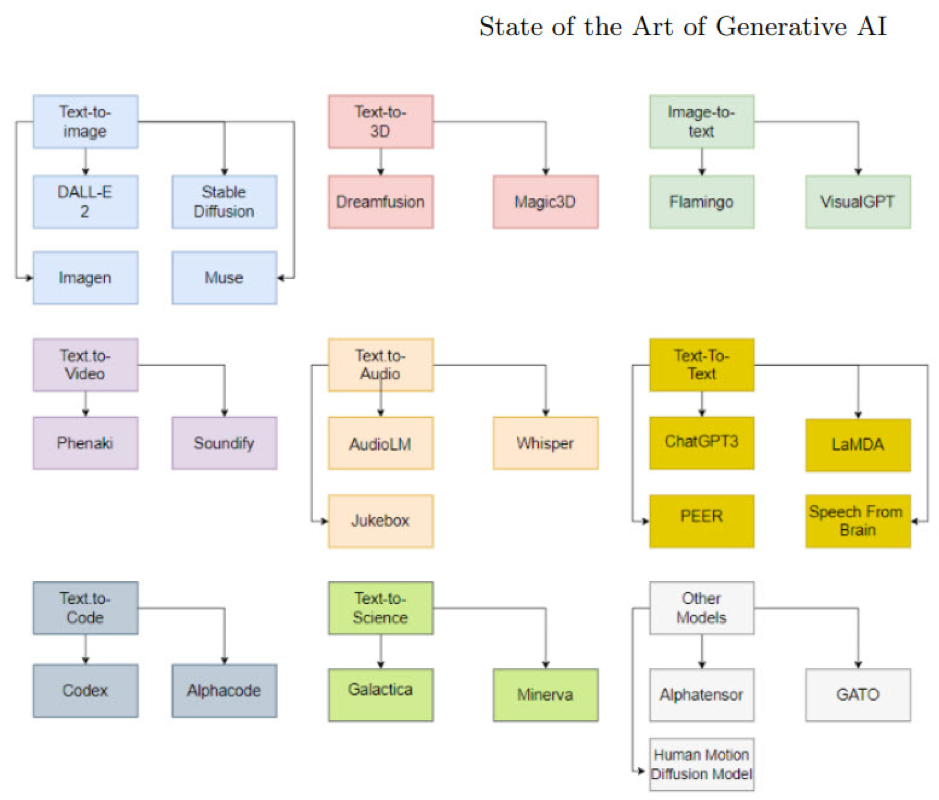

Several companies have already invested in the automated generation of various types of content, such as text, videos, 2D or 3D images, sounds, or even computer programs. Generative technology has also been used to produce scientific texts from a textual description of the desired content. Figure 1 provides an overview of the considerable work already done with this technology:

Figure 1 - source: https://arxiv.org/pdf/2301.04246.pdf

Artificial intelligence (AI) thus covers a wide variety of technologies and application areas. Here are some typical achievements obtained thanks to AI:

- The prediction of various variables, such as the price of a stock, the risk of fraud in a transaction, the next purchase of a customer, the content of an image or video, or the risk of developing a disease.

- The extension of human capabilities through the creation of virtual or augmented realities, such as online meetings that reproduce the conditions of a real meeting using 3D imagery, or virtual screens that guide a technician through operating machines they know little about.

- The use of objects connected not only to the internet but also to the human body. This can take the form of watches equipped with sensors that detect the electrical signals emitted by the motor nerves passing through the wrist. These watches translate hand movements into commands that control the functions of a device (copying and pasting, or switching it on or off remotely, to take the most trivial examples).

These AI applications have already begun to transform many areas, including finance, healthcare, industry, education, entertainment, and many more.

Figure 2 - Source: Meta (Facebook)

AI, Biology, and Neuroscience

Another remarkable example of the use of AI is the creation of brain implants: electronic modules connected to very thin wires called "electrodes," which are inserted into the brain. These electrodes detect and record neuronal activity and can stimulate neurons based on the signals received. This technology aims to restore mobility and communication in paralyzed individuals, treat neurological disorders such as Parkinson's disease or epilepsy, or improve cognitive and communication abilities.

Progress in this field is breathtaking, with brain implants that allow a person to communicate with a computer simply by thinking of words, and even to perceive tactile sensations through artificial fingers. Although this technology is still in development, it has the potential to transform the lives of many people with neurological diseases or communication disorders.

Figure 3 - credit: neuralink.com

Risks of Misuse

Despite the many positive applications of AI, there are also concerns about its potentially harmful uses. Here are some examples:

- Generative techniques can be used to create "deepfakes," which are videos manipulated to appear realistic but show events that never occurred. This can have negative consequences for the privacy, reputation, or security of individuals or organizations.

- Brain chip implants could potentially provide access to knowledge present on the internet, which could be used to cheat in competitions or exams. This could also have negative implications for privacy and personal data security.

- Military uses of AI and increasingly sophisticated and harmful cyberattacks could have serious consequences for international security and stability.

Beyond these harmful uses, there are also concerns about the advent of "strong AI," which is an artificial intelligence that would have the ability to understand, learn, and autonomously apply its knowledge to a wide range of tasks and problems, similar to human intelligence. Some fear that this could lead to manipulation and control of the human species.

However, most experts believe that this danger is not imminent, as current AI is not capable of understanding the meaning of the words it manipulates. For example, it can isolate a dog and a cat in a photo and group them together under the general concept of "animals," but it does not understand the meaning or complexity of the concept of "animals" itself. Similarly, it can group a plane and a car under the concept of "means of transportation," but it does not understand the meaning or complexity of the concept of "transportation" itself. That said, it is important to continue to monitor and regulate the use of AI to minimize risks and maximize its benefits.

Processors, Data, and Talent

The first ideas that led to the development of deep learning date back to the 1950s, with pioneering work such as that of Marvin Minsky, who together with Dean Edmonds built SNARC, one of the first neural network machines, in 1951. Since the 2000s, deep learning has seen explosive growth thanks to the surge in computing power, the availability of massive amounts of data, and the human talent dedicated to creating new techniques for optimizing neural networks.

These three elements, computing hardware, massive data, and talent, are the subject of a race for AI development, led mainly by the United States and China, and to a lesser extent by the European Union. The economic, geopolitical, and military stakes are considerable and could change the world order, as AI gives a decisive advantage to those who exploit its most advanced versions.

The recent decisions taken by the Biden administration to restrict the sale of semiconductor manufacturing equipment and AI chips to China illustrate what is at stake. The same administration has also taken measures to make it easier for students in science, technology, engineering, and mathematics to come to the United States.

You cannot put progress on “pause”

It is unlikely that the recent call for a pause on AI development, backed by Elon Musk among others, will be heeded by the main players in the race for AI. To mitigate the risks of a dangerous drift for humanity, international organizations and the scientific and software development communities would need to work together to establish regulations or an ethical charter aimed at limiting potential abuses as far as possible. However, the current global context and the heightened tensions between the stakeholders in this race make such a process hard to envision.